LangChain - Intro

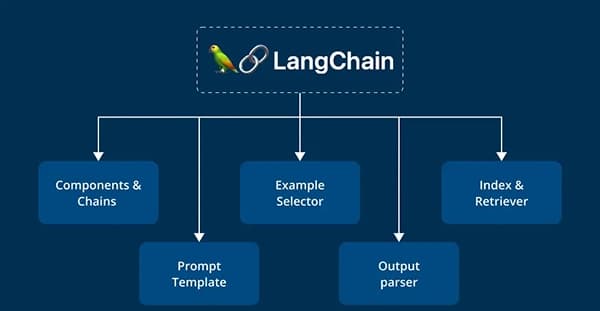

Imagine having a virtual assistant that not only understands your commands but also learns from your interactions, creating personalized responses. Or envision a customer support chatbot that can handle complex queries with ease, delivering accurate and context-aware answers. These are the kinds of applications LangChain, a framework for building applications around large language models, is designed to support.

Create the Project Directory

First, create the project directory and navigate into it:

mkdir langchain-tutorial

cd langchain-tutorial

Initialize the project with a package.json file:

npm init -y

This generates a default package.json file. The install commands in the following steps add the project's dependencies to it.

Install TypeScript and the necessary types for Node.js:

npm install --save-dev typescript @types/node

Install ts-node and nodemon for development. These tools let you run TypeScript files directly and restart the process automatically when files change:

npm install --save-dev ts-node nodemon

Install Express, a popular Node.js web framework, and its TypeScript definitions:

npm install express

npm install --save-dev @types/express

Install the langchain package and the OpenAI integration:

npm install langchain @langchain/openai

Install dotenv for environment variable management:

npm install dotenv

Create a tsconfig.json file to configure TypeScript:

npx tsc --init

Create the necessary directories and a basic index.ts file:

mkdir src

touch src/index.ts
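The examples that follow read the API key with process.env.OPENAI_API_KEY! after calling dotenv.config(). The trailing ! only satisfies the TypeScript compiler; it does not check that the key exists at run time. Below is a minimal sketch of a fail-fast check, assuming the key is stored in a .env file at the project root as OPENAI_API_KEY=... The names Env and requireEnv are hypothetical helpers for illustration, not part of dotenv or LangChain.

```typescript
// Hypothetical helper (not part of dotenv or LangChain):
// return the named variable or throw a clear error when it is missing.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Possible use in src/index.ts, after dotenv.config():
//   const apiKey = requireEnv(process.env, "OPENAI_API_KEY");
```

Passing the environment in as an argument keeps the helper easy to test with a plain object instead of the real process.env.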

Chain Invoke

This code sets up and runs a simple LangChain-based application that generates a joke using the OpenAI GPT-3.5-turbo model. It demonstrates how to:

- Load environment variables.

- Create and configure an OpenAI language model.

- Define a prompt template with a placeholder.

- Create a processing chain by piping the prompt into the model.

- Invoke the chain with a specific input and log the generated response.

// src/index.ts

import { ChatOpenAI } from "@langchain/openai";

import * as dotenv from 'dotenv'

import { ChatPromptTemplate } from "@langchain/core/prompts"

dotenv.config()

async function main() {

// Create model

const model = new ChatOpenAI({

openAIApiKey: process.env.OPENAI_API_KEY!,

modelName: 'gpt-3.5-turbo',

temperature: 0.7

});

const prompt = ChatPromptTemplate.fromTemplate("You are a comedian. Tell a joke based on the following word {input}");

// Create chain

const chain = prompt.pipe(model)

// Call chain

const response = await chain.invoke({

input: "cat"

})

console.log(response)

}

main().catch(console.error);

- openAIApiKey: This gets the OpenAI API key from environment variables.

- modelName: Specifies the model to use (in this case, gpt-3.5-turbo).

- temperature: Controls how much randomness goes into the response. Lower values make the output more predictable, higher values make it more varied; 0.7 gives moderately creative responses.

- Create Chain: The prompt template is piped into the model using the pipe method. This creates a processing chain where the formatted prompt is directly fed into the language model.

- Invoke Chain: The chain is called (invoked) with the input "cat". This means the input word "cat" will replace the {input} placeholder in the prompt template. The formatted prompt is then processed by the model to generate a response.

- Run main Function: The main function is called and any errors are caught and logged to the console using console.error.
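To make the "format the prompt, then feed it to the model" idea concrete, here is a dependency-free sketch of the same flow. It is an illustration only, not LangChain's implementation: Step, formatTemplate, pipeSteps, and fakeModel are hypothetical names, and the model is a stub that echoes its prompt instead of calling OpenAI.

```typescript
// Illustrative only: none of these names come from LangChain.
type Step<I, O> = (input: I) => Promise<O>;

// Replace each {name} placeholder with the matching value;
// placeholders without a value are left untouched.
function formatTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (match: string, key: string) => values[key] ?? match);
}

// Compose two async steps so the output of the first feeds the second.
function pipeSteps<A, B, C>(first: Step<A, B>, second: Step<B, C>): Step<A, C> {
  return async (input: A) => second(await first(input));
}

const jokeTemplate = "You are a comedian. Tell a joke based on the following word {input}";

// Step 1: fill the template with the caller's values.
const promptStep: Step<Record<string, string>, string> = async (values) =>
  formatTemplate(jokeTemplate, values);

// Step 2: hypothetical model stub that echoes the prompt it receives.
const fakeModel: Step<string, string> = async (prompt) => `[model reply to] ${prompt}`;

// The composed chain takes { input: ... } and resolves to the stub's reply.
const sketchChain = pipeSteps(promptStep, fakeModel);
```

Calling sketchChain({ input: "cat" }) first substitutes "cat" for {input} and then hands the finished prompt to the stub, which mirrors the order of operations described in the list above.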

Chain Stream

// src/index.ts

import { ChatOpenAI } from "@langchain/openai";

import dotenv from 'dotenv';

dotenv.config();

const model = new ChatOpenAI({

openAIApiKey: process.env.OPENAI_API_KEY!

})

async function run() {

const response = await model.stream("Write a poem about AI")

//console.log(response)

for await (const chunk of response) {

console.log(chunk?.content)

}

}

run().catch(console.error)

- async function run(): This defines an asynchronous function named run. Using async allows you to use await within the function to handle asynchronous operations.

- const response = await model.stream("Write a poem about AI"):

- model.stream(...): This method sends a prompt ("Write a poem about AI") to the OpenAI model and returns a stream of response chunks. A stream is a way of handling data that is being received over time, rather than all at once.

- await: The await keyword pauses the function until the stream has been opened and is ready to be iterated. It does not wait for the whole response; the individual chunks arrive later, as the loop below consumes them.

- for await (const chunk of response): This line iterates over each chunk of data received from the stream. The for await...of loop is specifically designed to handle asynchronous iteration, making it well suited to processing streamed data.

- chunk?.content: This extracts the content of each chunk. The optional chaining (?.) is used to safely access the content property, ensuring that the code does not throw an error if chunk is undefined or null.

This code snippet is a simple demonstration of how to interact with OpenAI's language models using the LangChain framework. It loads an API key from an environment variable, sends a prompt to the OpenAI model, and then streams the response back, printing each chunk of the response (in this case, a poem about AI) to the console in real-time. This approach is particularly useful for handling long responses or when you want to process data as it arrives rather than waiting for the entire response.
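The streaming pattern can be tried without an API key. The sketch below replaces the model with a hypothetical async generator, fakeStream, that yields a string a few characters at a time, and a hypothetical collect helper that handles each chunk as it arrives while also assembling the full text. Neither name comes from LangChain; the point is only to show that the chunks are received inside the for await...of loop.

```typescript
// Hypothetical stand-in for a model stream: yields `text` in small pieces.
async function* fakeStream(text: string, chunkSize = 4): AsyncGenerator<string> {
  for (let i = 0; i < text.length; i += chunkSize) {
    yield text.slice(i, i + chunkSize);
  }
}

// Consume any async iterable of strings chunk by chunk,
// calling onChunk for each piece and returning the joined text.
async function collect(
  stream: AsyncIterable<string>,
  onChunk: (chunk: string) => void
): Promise<string> {
  let full = "";
  for await (const chunk of stream) {
    onChunk(chunk); // handle the piece as soon as it arrives
    full += chunk;  // and keep it so the full text is available afterwards
  }
  return full;
}
```

With a real model, the same loop shape applies, with chunk.content taking the place of the plain string chunk. Accumulating the pieces is useful when you want both live output and the complete response at the end.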

Chain Batch

// src/index.ts

import { ChatOpenAI } from "@langchain/openai";

import dotenv from 'dotenv';

dotenv.config();

const model = new ChatOpenAI({

openAIApiKey: process.env.OPENAI_API_KEY!

})

async function run() {

const responses = await model.batch(["Hello", "How are you ?"])

// batch resolves to one response per prompt, in the same order as the inputs

for (const response of responses) {

console.log(response.content)

}

}

run().catch(console.error)

- async function run(): This defines an asynchronous function named run. The async keyword allows the function to use the await keyword, which is used to wait for asynchronous operations to complete.

- model.batch(["Hello", "How are you ?"]): The batch method sends multiple inputs (in this case, ["Hello", "How are you ?"]) to the language model at once and returns their responses. It's used for processing multiple prompts efficiently in one go.

- await: The await keyword is used to pause the execution of the run function until the batch method returns a response. This makes sure the code doesn't move on to the next line until the responses are received.

This code is a simple example of how to use the LangChain library with OpenAI's language models. It imports necessary modules, loads environment variables, creates an instance of the ChatOpenAI class with an API key, and sends a batch of prompts to the model, printing out the responses.
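Conceptually, a batch call runs the same operation over a list of inputs and hands back the results in input order. The sketch below shows one way to get that behavior with a cap on how many calls run at once. It is an assumption-laden illustration, not LangChain's implementation: batchInOrder, its maxConcurrency parameter, and the worker function are hypothetical names.

```typescript
// Hypothetical helper: run `worker` over every input, at most
// `maxConcurrency` calls at a time, returning results in input order.
async function batchInOrder<I, O>(
  inputs: I[],
  worker: (input: I) => Promise<O>,
  maxConcurrency = 2
): Promise<O[]> {
  const results: O[] = new Array<O>(inputs.length);
  let next = 0; // index of the next input that has not been claimed yet

  // Each lane repeatedly claims the next unprocessed index until none remain.
  async function runLane(): Promise<void> {
    while (next < inputs.length) {
      const index = next++;
      // Store by index so completion order cannot reorder the results.
      results[index] = await worker(inputs[index] as I);
    }
  }

  const laneCount = Math.max(1, Math.min(maxConcurrency, inputs.length));
  await Promise.all(Array.from({ length: laneCount }, () => runLane()));
  return results;
}
```

Writing each result to its original index is what keeps the output aligned with the inputs even when a later prompt finishes before an earlier one.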

Additional Methods

In LangChain, the primary methods covered above (model.invoke, model.batch, and model.stream) call a model with a single input, with several inputs at once, or with streamed output. In the TypeScript library all three return promises, so each is used with await. Besides these, there are other methods and patterns you might encounter, depending on the LangChain version and the specific model or service you're using. Several of the names below come from LangChain's Python API or describe a general pattern rather than a method on the model object, so check the API reference for your installed version before relying on any of them:

- model.generate: A lower-level method that generates output for one or more prompts and returns the raw generations together with provider metadata. Settings such as temperature and max tokens are normally configured on the model itself.

- Chat interactions: Chat models such as ChatOpenAI do not need a separate chat method. A conversational exchange is expressed by passing a sequence of messages, rather than a single prompt string, to invoke.

- model.agenerate: In the Python API, the async version of generate. In TypeScript, generate already returns a promise.

- model.ainvoke: In the Python API, the asynchronous counterpart of invoke, useful where you need non-blocking execution. TypeScript has no separate method because invoke is already asynchronous.

- model.abatch: In the Python API, the asynchronous version of batch, allowing you to process multiple inputs concurrently.

- model.astream: In the Python API, the asynchronous version of stream, useful for streaming output in real time without blocking other tasks.

- Map-reduce: A pattern rather than a model method, often used in larger pipelines: a model is applied to each input (map), and the results are then combined or aggregated (reduce).

- apply: A method that appears on some legacy chain classes for running a series of inputs in a functional style. For models, batch covers the same need.

- Piping: Not a model method as such, but the way steps are chained in sequence. Prompts and models expose a pipe method, used earlier as prompt.pipe(model).

- model.predict: A legacy convenience method in some versions that takes a string and returns a string. Newer code generally uses invoke instead.

These methods and patterns can vary depending on the specific implementation and the backend service or model you're working with. LangChain is designed to be modular and extensible, so the exact methods available can differ based on your setup and the specific models or providers you're using.
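As an illustration of the map-reduce pattern mentioned in the list, here is a small dependency-free sketch. The helper mapReduce and the two stubs are hypothetical names introduced for this example. In a real pipeline each stub would be replaced by a model or chain call; here they are plain functions so the flow can be followed without an API key.

```typescript
// Hypothetical helper: apply mapFn to every input concurrently,
// then pass all mapped results to reduceFn for aggregation.
async function mapReduce<I, M, R>(
  inputs: I[],
  mapFn: (input: I) => Promise<M>,
  reduceFn: (mapped: M[]) => Promise<R>
): Promise<R> {
  const mapped = await Promise.all(inputs.map((input) => mapFn(input)));
  return reduceFn(mapped);
}

// Stub "summarizer": keeps only the first sentence of each text.
const summarizeStub = async (text: string): Promise<string> =>
  (text.split(".")[0] ?? "").trim();

// Stub "combiner": joins the partial summaries into one string.
const combineStub = async (parts: string[]): Promise<string> => parts.join(" | ");
```

A call such as mapReduce(documents, summarizeStub, combineStub) summarizes each document independently and then merges the partial results, which is the shape this pattern usually takes when the inputs are too large to send to a model in one request.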